Summary

Text data cleaning is an essential pre-processing step in Natural Language Processing (NLP). The goal of text data cleaning is to transform raw text data into a more structured format that can be analysed by machine learning algorithms. Here are some common text data cleaning methods in NLP:

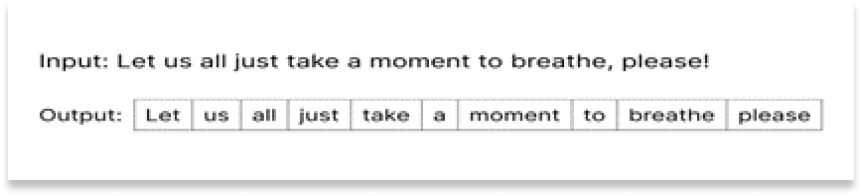

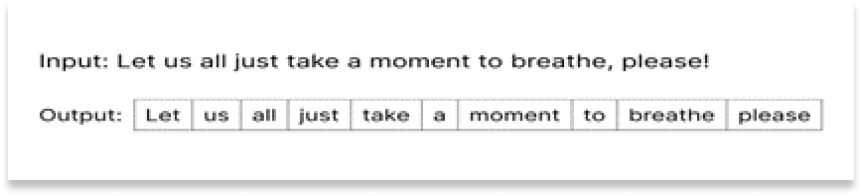

Tokenization

Tokenization is the process of breaking down a text into individual words, phrases, or symbols. This is usually the first step in NLP and helps to create a structured representation of the text. A token may be a word, part of a word or just characters like punctuation. It is one of the most foundational NLP tasks and a difficult one, because every language has its own grammatical constructs, which are often difficult to write down as rules. Programming languages work by breaking up raw code into tokens and then combining them by some logic (the program’s grammar) in natural language processing. By breaking up the text into small, known fragments, we can apply a small set of rules to combine them into some larger meaning. In programming languages, tokens are connected via formal grammars.

But in natural language processing, different ways of combining tokens have evolved over the years alongside an array of methods to tokenize. But the motivation behind tokenization has stayed the same, to present the computer with some finite set of symbols that it can combine to produce the desired result.

Stopword Removal

The words which are generally filtered out before processing a natural language are called stop words. These are actually the most common words in any language (like articles, prepositions, pronouns, conjunctions, etc) and does not add much information to the text. Examples of a few stop words in English are “theâ€, “aâ€, “anâ€, “soâ€, “whatâ€.

Why do we remove stop words?

Stop words are available in abundance in any human language. By removing these words, we remove the low-level information from our text in order to give more focus to the important information. In order words, we can say that the removal of such words does not show any negative consequences on the model we train for our task. Removal of stop words definitely reduces the dataset size and thus reduces the training time due to the fewer number of tokens involved in the training.

Do we always remove stop words? Are they always useless for us?

The answer is no! We do not always remove the stop words. The removal of stop words is highly dependent on the task we are performing and the goal we want to achieve. For example, if we are training a model that can perform the sentiment analysis task, we might not remove the stop words.

Meta-Learning Variants and its Applications

In the field of Natural Language Processing i.e., NLP, Lemmatization and Stemming are Text Normalization techniques. These techniques are used to prepare words, text, and documents for further processing.

In the field of Natural Language Processing i.e., NLP, Lemmatization and Stemming are Text Normalization techniques. These techniques are used to prepare words, text, and documents for further processing.

There is always a common root form for all inflected words. Further, degree of inflection varies from lower to higher depending on the language. To sum up, root form of derived or inflected words are attained using Stemming and Lemmatization.

Stemming:

It is the process of reducing infected words to their stem. For instance, in figure 1, stemming with replace words “history†and “historical†with “historiâ€. Similarly, for the words finally and final. Stemming is the process of removing the last few characters of a given word, to obtain a shorter form, even if that form doesn’t have any meaning.

Lemmatization:

The purpose of lemmatization is same as that of stemming but overcomes the drawbacks of stemming. In stemming, for some words, it may not give may not give meaningful representation such as “Historiâ€. Here, lemmatization comes into picture as it gives meaningful word.

Lemmatization takes more time as compared to stemming because it finds meaningful word/ representation. Stemming just needs to get a base word and therefore takes less time.

Spell checking and correction

Spell checking and correction is the process of identifying and correcting misspelled words in text data. This can help to improve the accuracy of machine learning models by reducing noise in the data. Spelling correction is often viewed from two angles. Non-word spell check is the detection and correction of spelling mistakes that result in non-words. In contrast, real word spell checking involves detecting and correcting misspellings even if they accidentally result in a real English word (real word errors).

Removing special characters and symbols

Removing special characters and symbols such as punctuation marks, hashtags, and emojis can help to simplify the text and reduce noise in the data.

Part-of-speech (POS) tagging

POS tagging is the process of identifying the grammatical components of a sentence, such as nouns, verbs, adjectives, and adverbs. This can help to provide more context to the text and improve the accuracy of machine learning models.

Normalization

One of the key steps in processing language data is to remove noise so that the machine can more easily detect the patterns in the data. Text data contains a lot of noise, this takes the form of special characters such as hashtags, punctuation and numbers. All of which are difficult for computers to understand if they are present in the data. We need to, therefore, process the data to remove these elements.

Additionally, it is also important to apply some attention to the casing of words. If we include both upper case and lower-case versions of the same words then the computer will see these as different entities, even though they may be the same.

Overall, text data cleaning is an essential pre-processing step in NLP that can help to improve the accuracy of machine learning models by reducing noise in the data and providing more structure and context to the text.