Summary

Azure Data Factory (ADF) is a cloud-based data integration service that allows you to create, schedule, and manage workflows for moving, transforming, and analysing data. It is part of the Microsoft Azure suite of services and is designed to work with a wide range of data sources, including on-premises and cloud-based systems. ADF does not store any data itself. Instead, it lets you create data-driven workflows that orchestrate the movement of data between supported data stores and then process that data using compute services in other regions or in an on-premises environment. It also lets you monitor and manage workflows through both programmatic and UI mechanisms.

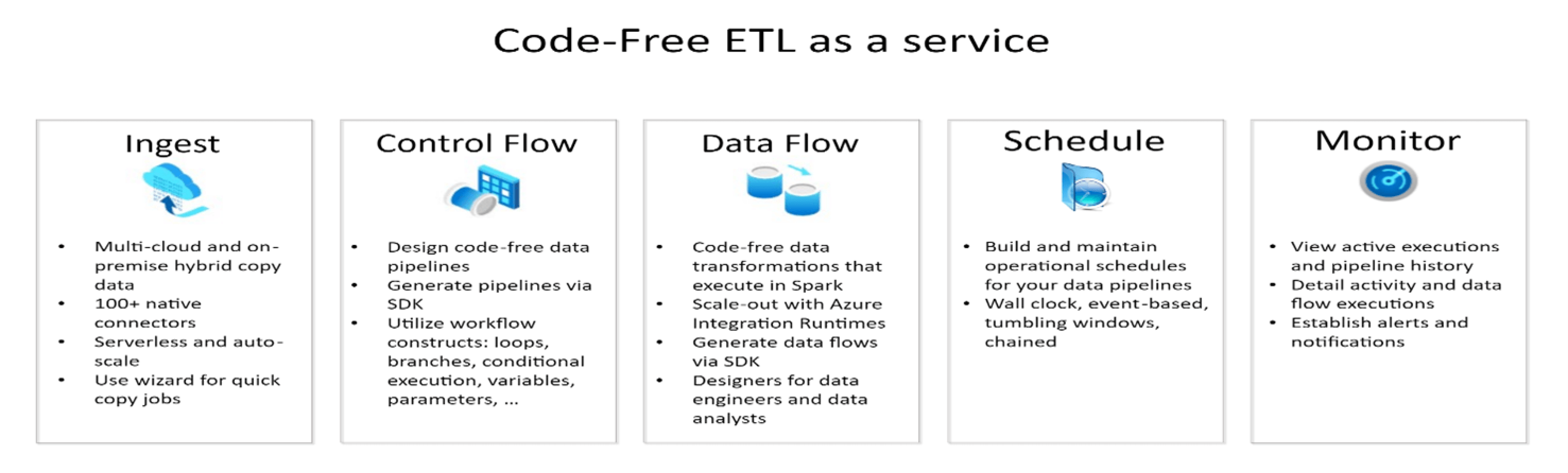

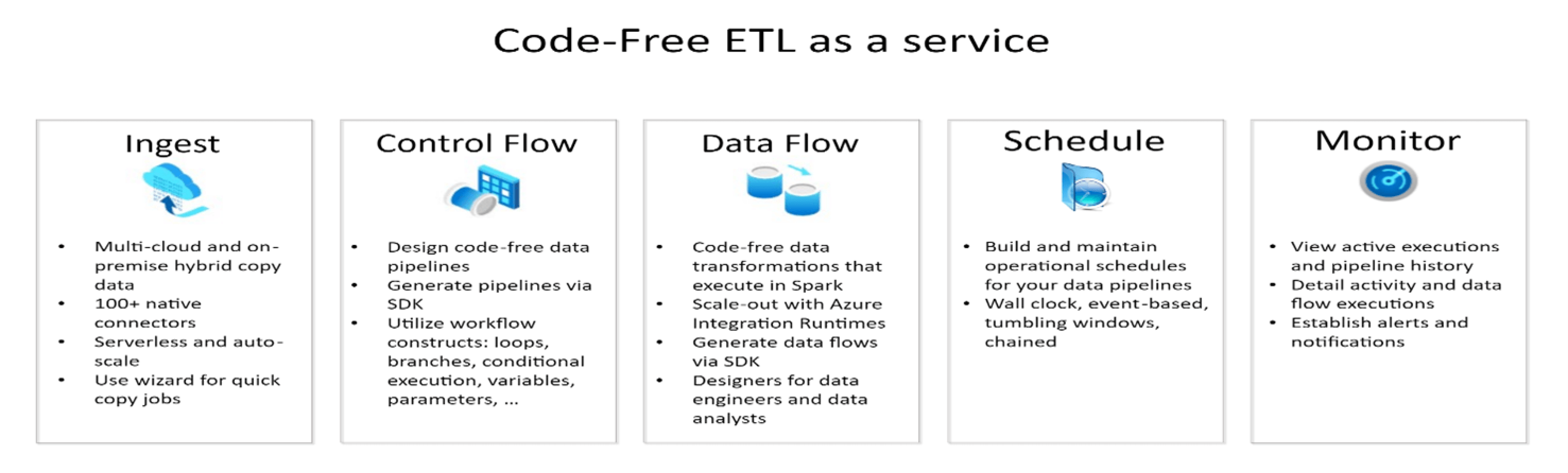

Azure Data Factory is built for scenarios where data sits in disparate stores and has to be brought together. It is a cloud-based ETL and data integration service that allows you to create data-driven workflows for orchestrating data movement and transforming data at scale. Using Azure Data Factory, you can create and schedule data-driven workflows (called pipelines) that ingest data from disparate data stores. You can build complex ETL processes that transform data visually with data flows or by using compute services such as Azure HDInsight Hadoop, Azure Databricks, and Azure SQL Database.

Features in Azure Data Factory

Data Movement: Azure Data Factory provides a range of data movement activities that allow you to copy data between different data stores, including Azure Blob Storage, Azure Data Lake Storage, SQL Server, and more.

Data Transformation: Azure Data Factory includes data transformation activities that allow you to transform data as it moves through the pipeline. You can use these activities to perform tasks such as filtering, aggregating, and joining data.

Data Orchestration: Azure Data Factory allows you to orchestrate complex data pipelines that include multiple data sources, transformations, and destinations. You can use control flow activities to manage the flow of data through the pipeline and ensure that each step completes successfully before moving on to the next.
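The idea that each step must complete successfully before the next one starts can be sketched in plain Python. This is a toy model and not ADF's execution engine: the `depends_on` field only loosely mirrors the `dependsOn` property that activities carry in pipeline JSON, and the `run_pipeline` helper and the activity names are hypothetical.

```python
from typing import Dict


def run_pipeline(activities: Dict[str, dict]) -> Dict[str, str]:
    """Run activities in dependency order; skip any whose upstream did not succeed."""
    status: Dict[str, str] = {}
    pending = dict(activities)
    while pending:
        progressed = False
        for name, spec in list(pending.items()):
            deps = spec["depends_on"]
            if any(d not in status for d in deps):
                continue  # an upstream activity has not finished yet
            if all(status[d] == "Succeeded" for d in deps):
                # All upstream activities succeeded, so this one may run.
                status[name] = "Succeeded" if spec["action"]() else "Failed"
            else:
                status[name] = "Skipped"
            del pending[name]
            progressed = True
        if not progressed:
            raise ValueError("circular or unknown dependency: " + ", ".join(sorted(pending)))
    return status


# Hypothetical three-step pipeline in which the middle step fails.
example = {
    "CopyRawData": {"depends_on": [], "action": lambda: True},
    "TransformData": {"depends_on": ["CopyRawData"], "action": lambda: False},
    "LoadWarehouse": {"depends_on": ["TransformData"], "action": lambda: True},
}
print(run_pipeline(example))
```

In this sketch a failed activity causes everything downstream of it to be skipped, which is one simple way to model "succeed before moving on".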

Integration with Other Azure Services: Azure Data Factory is designed to work seamlessly with other Azure services, such as Azure Databricks, Azure HDInsight, and Azure Synapse Analytics. This allows you to build end-to-end data pipelines that leverage the capabilities of these services.

Monitoring and Management: Azure Data Factory includes built-in monitoring and management tools that allow you to track the progress of your data pipelines and troubleshoot any issues that arise.

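As mentioned, Azure Data Factory also provides a way to monitor and manage pipelines. In the classic (version 1) experience, you launch the Monitor and Management app by clicking the Monitor & Manage tile on the Data Factory blade for your data factory. In the current version, the equivalent views are on the Monitor tab of Azure Data Factory Studio.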

How does it work?

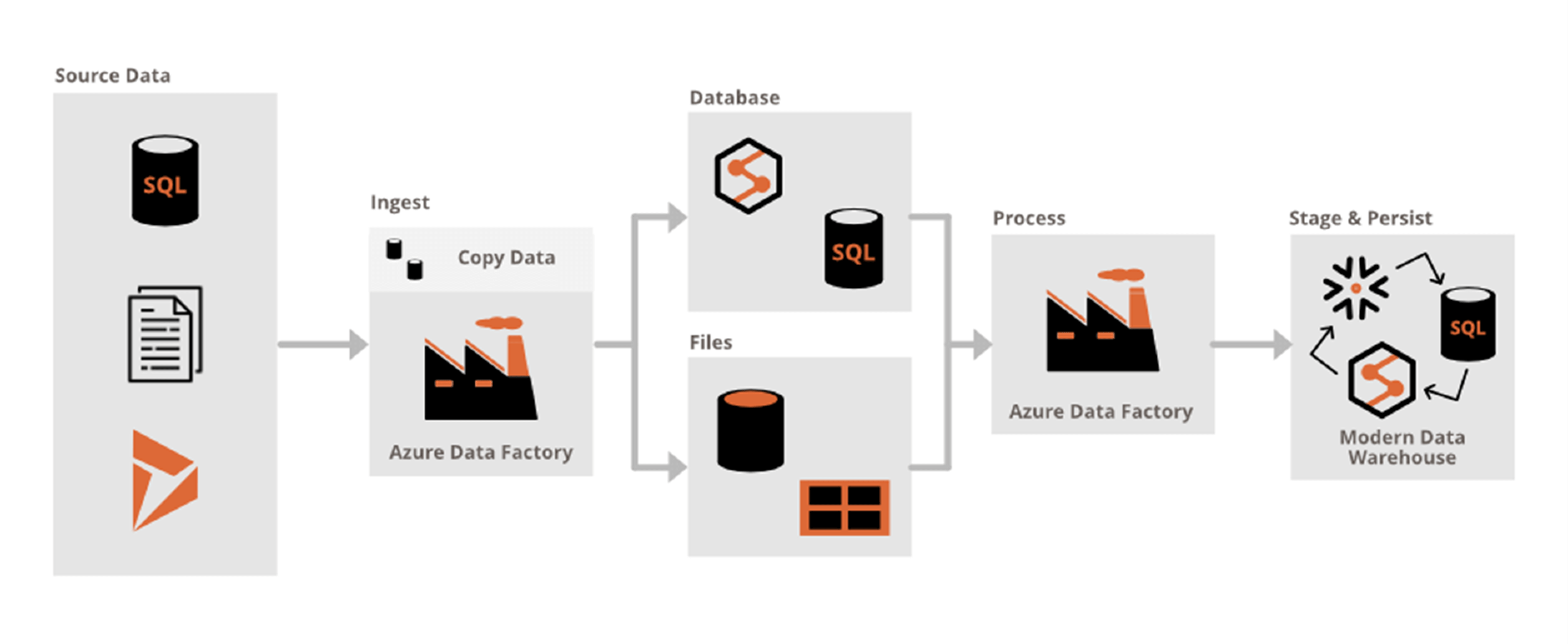

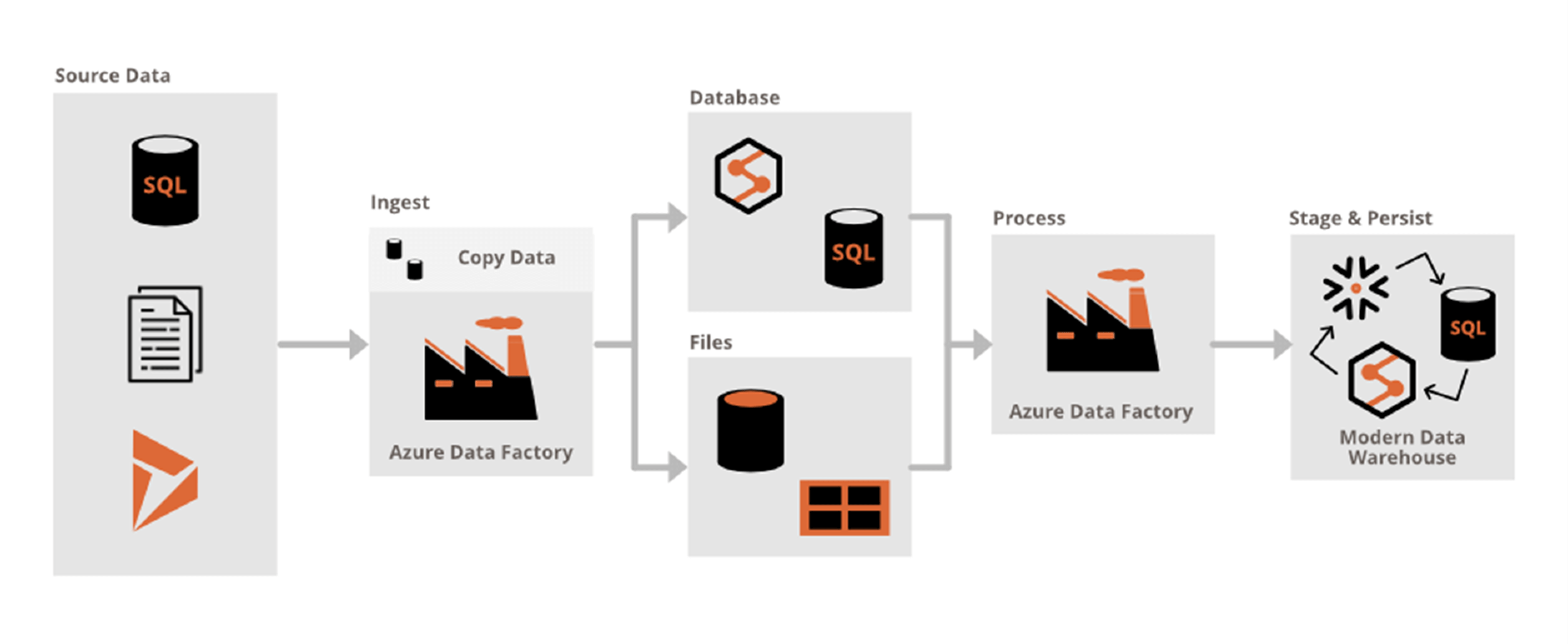

Data Factory contains a series of interconnected systems that provide a complete end-to-end platform for data engineers.

The Data Factory service lets you create data pipelines that move and transform data and then run those pipelines on a specified schedule (hourly, daily, weekly, and so on). As a result, the data that workflows consume and produce is time-sliced, and a pipeline's mode can be set to scheduled (for example, once a day) or one-time.
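To make "time-sliced" concrete, here is a minimal Python sketch that splits a period into the windows a scheduled pipeline would process one at a time. It only illustrates the concept; the `time_slices` helper is hypothetical and is not part of any ADF SDK.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def time_slices(
    start: datetime, end: datetime, interval: timedelta
) -> Iterator[Tuple[datetime, datetime]]:
    """Yield consecutive [slice_start, slice_end) windows covering start..end."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    slice_start = start
    while slice_start < end:
        # The final slice is truncated so it never runs past `end`.
        slice_end = min(slice_start + interval, end)
        yield slice_start, slice_end
        slice_start = slice_end


# Daily slices over a three-day period: each run would handle one window.
for window_start, window_end in time_slices(
    datetime(2024, 1, 1), datetime(2024, 1, 4), timedelta(days=1)
):
    print(window_start.isoformat(), "->", window_end.isoformat())
```

A one-time pipeline corresponds to a single window, while an hourly schedule over the same period would produce 72 of them.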

The architecture of Azure Data Factory:

The figure below describes the architecture of a data engineering flow using Azure Data Factory.

How to create a data factory in Azure?

To get started with Data Factory, create a data factory in Azure, then create the four key components (linked services, datasets, activities, and pipelines) using the Azure portal, Visual Studio, PowerShell, or similar tools. Because the four components are defined in editable JSON, you can also deploy them together in a single ARM template.
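Because the components are JSON documents, their general shape can be sketched as Python dictionaries. The example below assumes the four components are a linked service, datasets, an activity, and a pipeline. The field names follow the usual layout of ADF JSON definitions but should be checked against the official reference before use, and every name and value (`ExampleStorageLinkedService`, the folder paths, the `unresolved_references` helper) is a placeholder. Nothing here is deployed to Azure.

```python
import json

# Placeholder linked service: where the data lives and how to connect to it.
linked_service = {
    "name": "ExampleStorageLinkedService",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection-string>"},
    },
}


def dataset(name: str, folder: str) -> dict:
    """Build a dataset definition that points at a folder via the linked service."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlob",
            "linkedServiceName": {
                "referenceName": linked_service["name"],
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"folderPath": folder},
        },
    }


input_ds = dataset("ExampleInputDataset", "container/input")
output_ds = dataset("ExampleOutputDataset", "container/output")

# Pipeline with a single Copy activity that reads one dataset and writes another.
pipeline = {
    "name": "ExampleCopyPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyInputToOutput",
                "type": "Copy",
                "inputs": [{"referenceName": input_ds["name"], "type": "DatasetReference"}],
                "outputs": [{"referenceName": output_ds["name"], "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "BlobSource"},
                    "sink": {"type": "BlobSink"},
                },
            }
        ]
    },
}


def unresolved_references(pipeline: dict, datasets: list, linked_services: list) -> list:
    """Return names referenced by the pipeline or datasets that are not defined."""
    dataset_names = {d["name"] for d in datasets}
    service_names = {s["name"] for s in linked_services}
    missing = []
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            if ref["referenceName"] not in dataset_names:
                missing.append(ref["referenceName"])
    for d in datasets:
        service_ref = d["properties"]["linkedServiceName"]["referenceName"]
        if service_ref not in service_names:
            missing.append(service_ref)
    return missing


print(json.dumps(pipeline, indent=2))
print(unresolved_references(pipeline, [input_ds, output_ds], [linked_service]))
```

Checking references locally like this is just a convenience for catching typos before the definitions are handed to the portal, PowerShell, or an ARM template.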

Azure Data Factory use cases

ADF can be used for:

• Supporting data migrations

• Getting data from a client’s server or online data to an Azure Data Lake

• Carrying out various data integration processes

• Integrating data from different ERP systems and loading it into Azure Synapse for reporting

In Data Factory, an activity defines the action to be performed. A linked service defines a target data store or a compute service. An integration runtime provides the bridge between the activity and linked services. It is referenced by the linked service or activity, and provides the compute environment where the activity either runs or is dispatched from. This way, the activity can be performed in the region closest to the target data store or compute service, with the best available performance, while still meeting security and compliance needs.
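The relationship between these three concepts can be sketched as a small Python model. It is an illustration only and covers just the case where the linked service carries the reference: the classes and the `resolve_runtime` helper are hypothetical, `connect_via` loosely mirrors the `connectVia` property of a linked service definition, and `AutoResolveIntegrationRuntime` is the name of the default Azure integration runtime that applies when no runtime is specified.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Name of the default Azure integration runtime used when none is specified.
DEFAULT_IR = "AutoResolveIntegrationRuntime"


@dataclass
class LinkedService:
    name: str
    connect_via: Optional[str] = None  # integration runtime name, if set explicitly


@dataclass
class Activity:
    name: str
    linked_service: str  # name of the linked service this activity targets


def resolve_runtime(activity: Activity, linked_services: Dict[str, LinkedService]) -> str:
    """Return the integration runtime an activity would use via its linked service."""
    service = linked_services[activity.linked_service]
    return service.connect_via or DEFAULT_IR


# Hypothetical setup: an on-premises SQL Server behind a self-hosted runtime,
# and a cloud blob store that relies on the default runtime.
services = {
    "OnPremSqlServer": LinkedService("OnPremSqlServer", connect_via="ExampleSelfHostedIR"),
    "CloudBlobStore": LinkedService("CloudBlobStore"),
}
print(resolve_runtime(Activity("ReadSales", "OnPremSqlServer"), services))
print(resolve_runtime(Activity("WriteSales", "CloudBlobStore"), services))
```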

Overall, Azure Data Factory is a powerful tool for building, managing, and monitoring data pipelines in the cloud. It provides a range of features and capabilities that make it easy to move and transform data across a wide range of data sources and destinations.